Project information

- Category: Text Summarization (Generative)

- Project date: 8 December, 2023

- Project URL: Project Link

- Paper: Link

Project Description

- Objective: Automatically generate concise, informative headlines from long news articles, using several NLP models and summarization techniques.

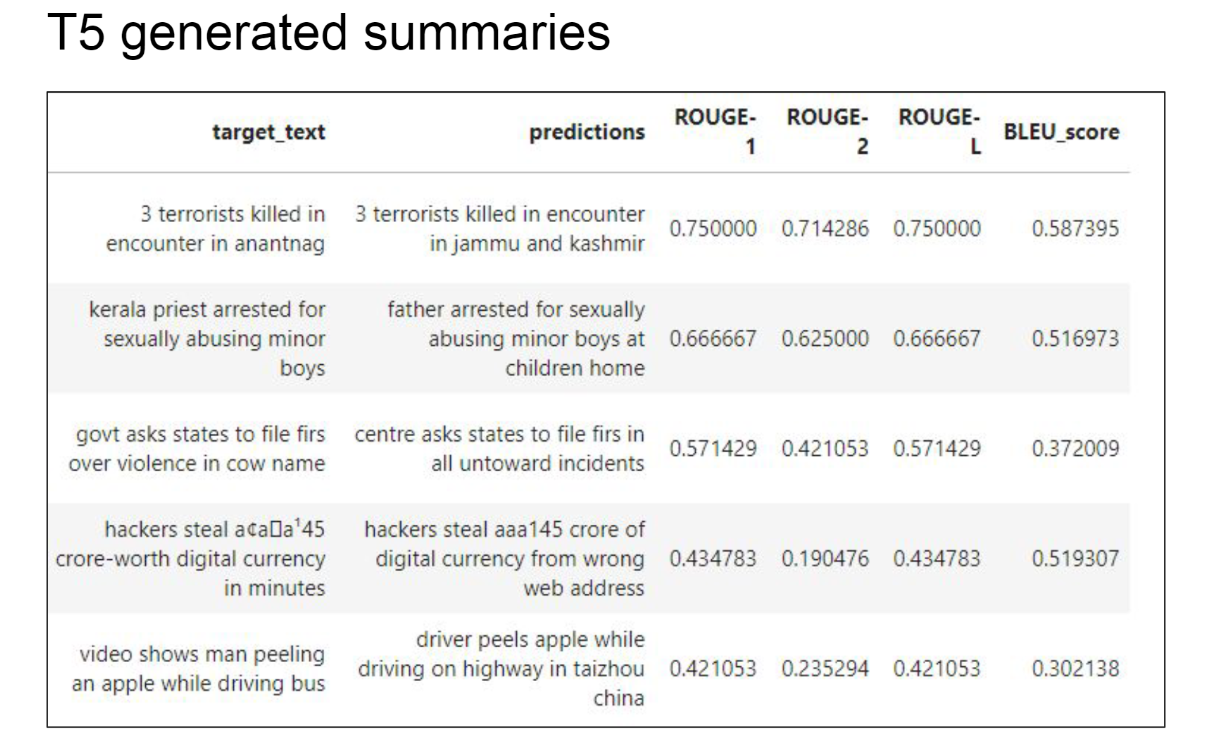

- Trained T5 on a limited dataset to generate headlines, taking advantage of its text-to-text architecture.

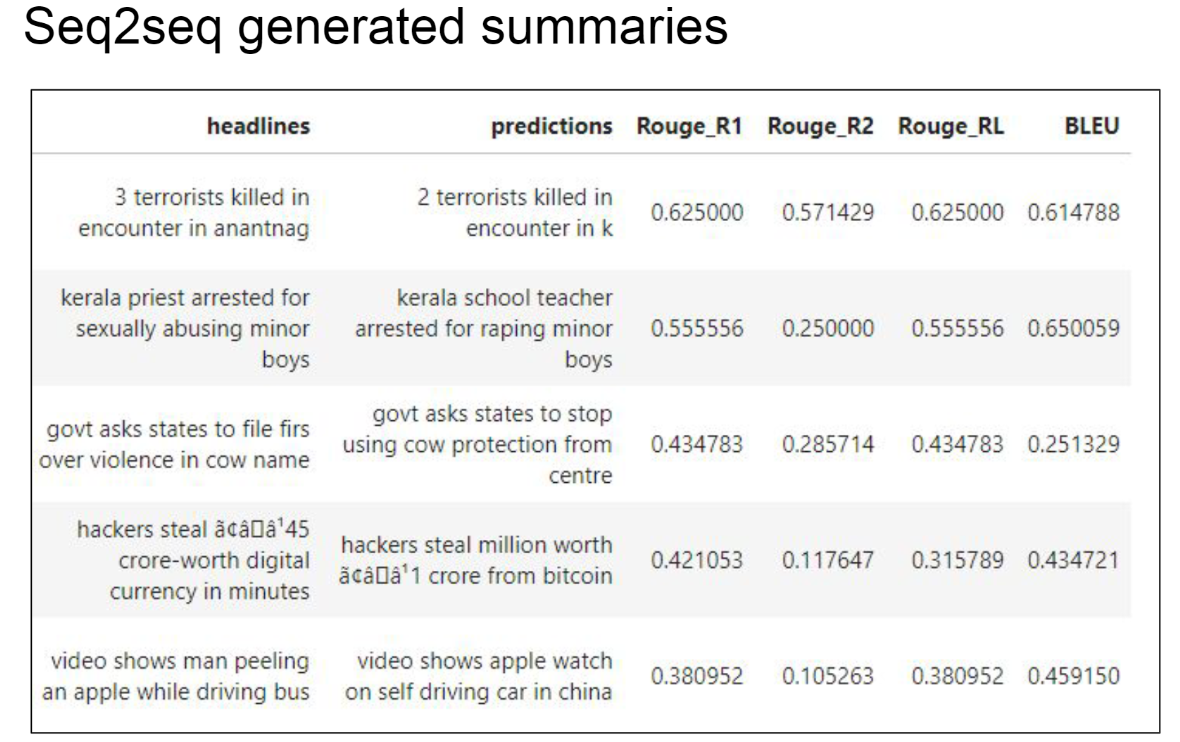

- Employed T5, BERT, and Seq2Seq models for text summarization, inspired by the 2019 ACL paper, generating article headlines with three distinct approaches.

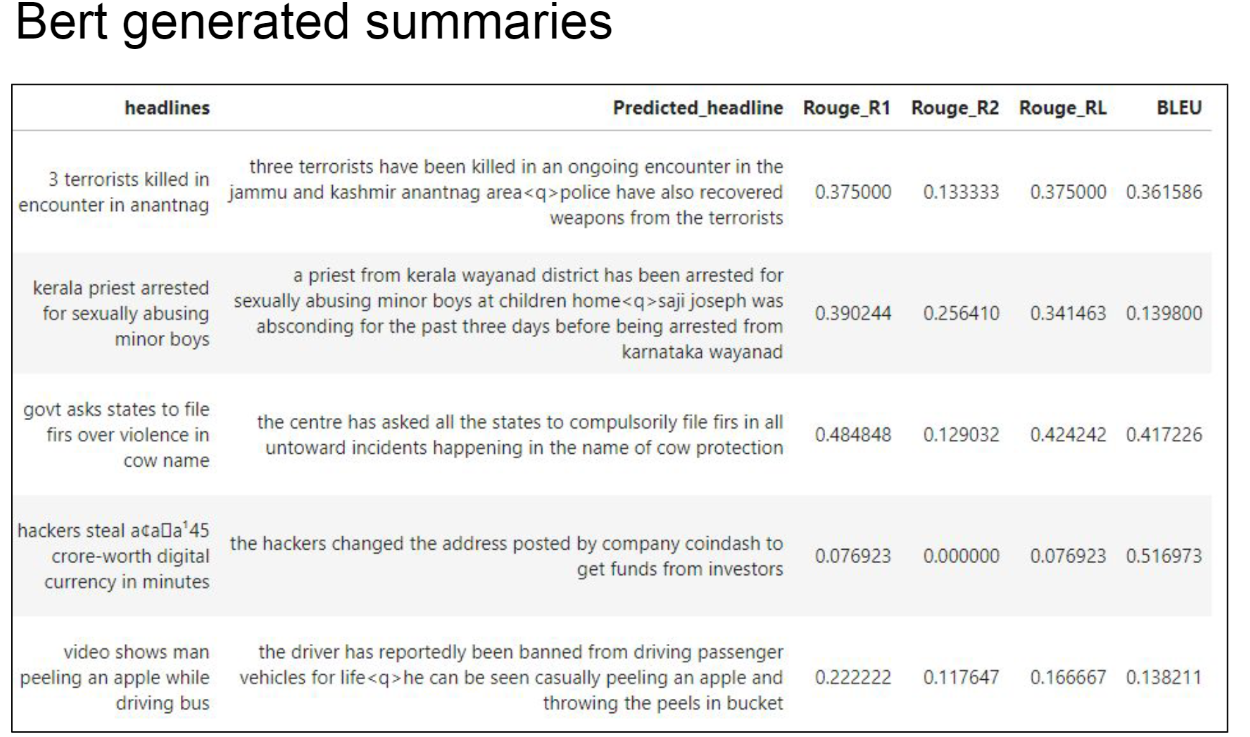

- Used the pre-trained BERT models described in the paper "Text Summarization with Pretrained Encoders" to produce text summaries.

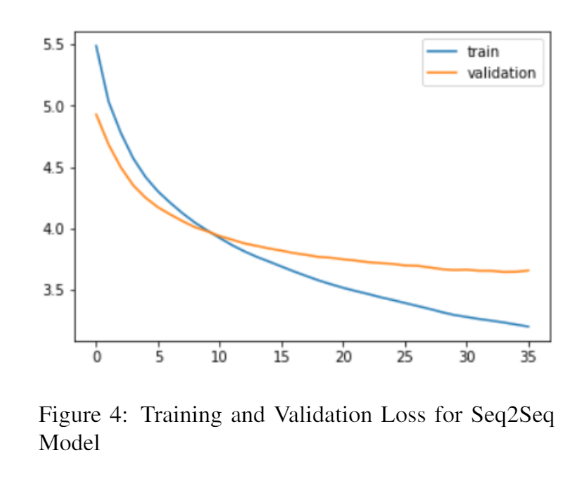

- Developed and trained the Seq2Seq model from scratch, giving full visibility into and control over its training process and outcomes.

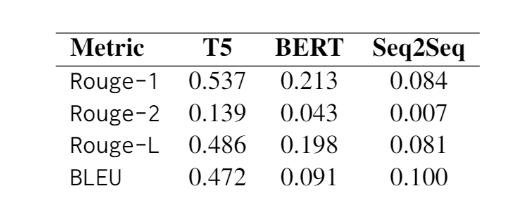

- Compared the models' performance using BLEU and ROUGE scores, with detailed findings consolidated in a research paper.
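
To illustrate the kind of scoring involved in comparing generated headlines against reference headlines, here is a minimal pure-Python sketch of ROUGE-N (F1) and a simplified sentence-level BLEU. It is not the project's evaluation code: the function names `rouge_n` and `bleu`, the whitespace tokenization, the single-reference setup, and the choice of `max_n=2` are all assumptions made for the example. Established packages typically add stemming, smoothing, and multi-reference support.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Count all n-grams of length n in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(reference, candidate, n=1):
    """ROUGE-N F1 between one reference and one candidate (hypothetical helper)."""
    ref = ngrams(reference.lower().split(), n)
    cand = ngrams(candidate.lower().split(), n)
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((ref & cand).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def bleu(reference, candidate, max_n=2):
    """Simplified unsmoothed BLEU with a single reference (hypothetical helper)."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    log_precisions = []
    for n in range(1, max_n + 1):
        ref = ngrams(ref_tokens, n)
        cand = ngrams(cand_tokens, n)
        total = sum(cand.values())
        clipped = sum((ref & cand).values())  # clipped n-gram matches
        if total == 0 or clipped == 0:
            return 0.0  # no smoothing: any zero precision gives a zero score
        log_precisions.append(math.log(clipped / total))
    # Brevity penalty discourages candidates shorter than the reference.
    if len(cand_tokens) > len(ref_tokens):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(ref_tokens) / len(cand_tokens))
    return brevity * math.exp(sum(log_precisions) / max_n)


if __name__ == "__main__":
    reference = "stocks rally as inflation cools"
    generated = "stocks rally as rates fall"
    print("ROUGE-1 F1:", round(rouge_n(reference, generated, n=1), 3))
    print("BLEU-2:", round(bleu(reference, generated, max_n=2), 3))
```

ROUGE is recall-oriented and BLEU is precision-oriented, so reporting both gives a more balanced view of how closely a generated headline matches its reference.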